BERT Serverless Deployment with Streamlit (and It’s Free!)

Imagine that you have built an NLP model and poured your heart into it. You want others to see and use it, right? You take a look at VPS prices, and they’re costly. The cheapest droplet on DigitalOcean is $5/month, and it’s (most likely) not powerful enough for your NLP model.

If you’re in that situation, we’re in the same boat! Well, Streamlit has got you (and me) covered.

All code used in this article is available in my GitHub repository here.

What is Streamlit?

Streamlit is a platform where you can deploy, manage, and share your Python project with ease. Previously, Streamlit was “just” an open-source library that lets you transform a Python script into an interactive app. But now, with Streamlit Sharing, they let us deploy our interactive apps to their platform, and it’s completely free!

To register, you don’t need to go through any complicated verification. They only need your name and your primary GitHub email. If you already have an idea for an app to deploy with Streamlit, you can let them know in the form too. I got my invitation in under 24 hours, and I filled out every available field, so that might affect your chance of getting accepted.

Sign up for your Streamlit Sharing account here.

So, how do we deploy our app?

As I told you earlier, Streamlit is a library that allows us to transform a Python script into an interactive app. Therefore, if we want to deploy our app, we’re required to transform it into an app with their library.

To use their library, we must install it with pip:

pip install streamlit

After transforming it, we can go to their website to deploy our application, then we can show it to the world. As one of their requirements, our application must be open-source on GitHub. I think they’re asking us to give back to the community, since they already give us (the community) a free but very awesome service.

Let’s Jump Right Into It

Here, I’m going to use a BERT text classification model. It’s not the best model I can build since I’m showing you how to deploy to Streamlit, not how to fine-tune it 😛.

To deploy our app, we are required to have two things: one is the actual application where we will code our Streamlit app, and the other one is our predictor. I’ll walk you through the predictor first since it’s our closest friend 😛

Predictor

I fine-tuned a model beforehand and saved it as a .h5 file. I chose .h5 because it’s easier to transfer: it’s only one file (even though it’s a big one).

Here, in predictor.py, I have four functions that do the following:

- load_model(): as its name states, it loads the model. I saved my model in the “model” folder under the name “model.h5”. Unfortunately, I can’t upload a 2GB file to GitHub (nor is it recommended), so I added a script to download my model from Google Drive (line 46–55).

- cleanText(sentence): this removes stop words from the input text. We’re using PySastrawi to stem and remove stop words, then NLPretext to handle various other things, such as removing end-of-line characters.

- encodeText(sentence): this tokenizes the text into the word ids and attention mask that BERT can understand. Before tokenizing, I clean the text with the cleanText function.

- predict(model, input): this one is pretty straightforward. We receive the model and the input text as parameters (I’ll talk about why we must pass the model later). In this function, we encode/tokenize the text, then give the encoded text to our model. When done, we return the probability of it being clickbait, from 0–100.
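The download step inside load_model() can be sketched roughly like this. It’s only a minimal sketch, not the script from my repository: the ensure_model_file name, the MODEL_URL placeholder, and the use of urllib are all assumptions for illustration, and a large file on Google Drive may need a dedicated download tool rather than a plain HTTP request.

```python
import os
import urllib.request

MODEL_PATH = os.path.join("model", "model.h5")
# Hypothetical placeholder: replace with a direct-download link to your own model file.
MODEL_URL = "https://example.com/model.h5"


def ensure_model_file(path=MODEL_PATH, url=MODEL_URL, download=urllib.request.urlretrieve):
    """Download the model to `path` unless it already exists.

    Returns True if a download happened, False if the file was already there.
    """
    if os.path.exists(path):
        return False
    folder = os.path.dirname(path)
    if folder:
        # Create the "model" folder on first run.
        os.makedirs(folder, exist_ok=True)
    download(url, path)
    return True
```

Calling ensure_model_file() at the top of load_model() means the 2GB file is fetched only once per deployment, and the `download` parameter lets you swap in whichever downloader works for your hosting.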

Did you notice the “@st.cache” right before my load_model() function? Yep, as the name says, it’s used to cache the model so we don’t need to reinitialize it every single time the app reruns. The function only runs the first time our application starts.

The model must be cached here since it takes time (like 4–5 seconds) to load it. Of course, we don’t want our end user to wait for that on every single request, right? 😄
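If you want to see the “runs only once” idea without Streamlit, here is a small sketch that uses functools.lru_cache from the standard library as a stand-in. It is not Streamlit’s decorator, and the load_model body below is a fake that just sleeps, but the effect is the same in spirit: the expensive function body runs on the first call, and later calls get the cached object back.

```python
import functools
import time

load_count = 0  # counts how many times the function body really runs


@functools.lru_cache(maxsize=None)
def load_model():
    """Stand-in for an expensive model load."""
    global load_count
    load_count += 1
    time.sleep(0.2)  # pretend this is the 4-5 second model load
    return {"name": "stand-in model"}


first = load_model()   # slow: runs the function body
second = load_model()  # fast: returns the cached object

print(load_count)       # how many times the body actually ran
print(first is second)  # whether both calls returned the same object
```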

Streamlit App

The Streamlit library is so painless that you only need to write 44 (or even fewer!) lines to get a beautiful app.

Streamlit already does the UI design out of the box. The upside is that it’s so easy to get things set up. The downside is that you get the same layout as every other Streamlit app, but that’s not a problem for me 😋

In this script, I import two functions from predictor.py along with the Streamlit library. First, I customize the page configuration, such as the title and icon. This changes our app’s browser tab details to help our users identify which tab is which.

After that, I load the model using our load_model() function. This is called under st.spinner() so the user can understand that we’re now loading our model.

What will happen if we call load_model() without st.spinner()? It’ll leave our page blank for a few seconds until the model is loaded. This hurts the UX and should be avoided.

After the model is loaded, I have a function called handle_text(text) with @st.cache placed before it. Inside the function, it calls predict(model, text), then returns the prediction result.

Remember that I told you earlier that we should pass our model? It’s because the load_model function and the place where we load our model are in different files. Therefore, we must pass the model to any function that needs to use it.

The rest is pretty straightforward. I put some text there just to give context, then add a text area to let the user input the news headline. In line 34, if the given text isn’t an empty string, we generate a prediction for it.

To start our app in the local environment, run this:

streamlit run your_app_file_name.py

For example:

streamlit run app.py

There is one more …

I told you earlier that we only need two files (in this project) to get our Streamlit app up and running. There is one more: requirements.txt.

Streamlit uses this as the reference to install all libraries that you’re using. Therefore it is a must to have this prepared. In this project, for example, I have my requirements.txt as follows:

tensorflow

keras

transformers

numpy

PySastrawi

nlpretext

streamlit

We’re Ready To Deploy

All three files required to get our app up and running are now complete. Now let’s jump into the fun part: deploying our application! Well, what are we waiting for? LET’S GOOO.

First of all, make sure you are invited to use Streamlit Sharing and already logged in 😁

Create Our New App

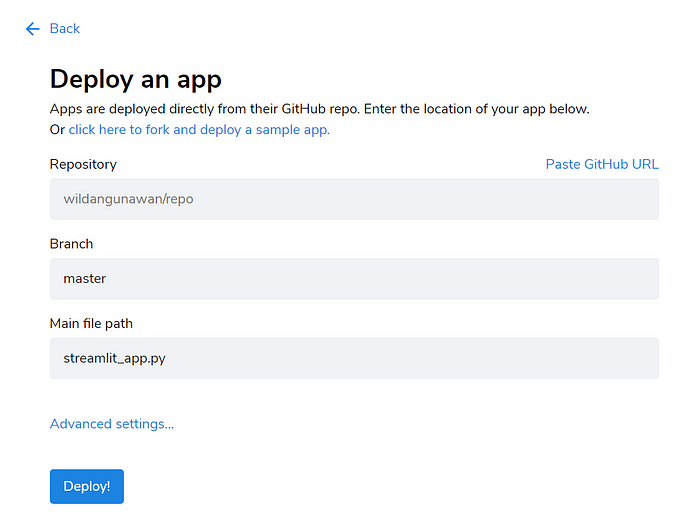

In your dashboard, click “New App” on the top-right hand corner. You now should see this:

Fill in every field with the correct value. For now, we don’t need to touch the advanced settings; I’ll cover them in another article later. When you’re all done, click “Deploy!”.

Sit back and relax. You might want to grab a cup of coffee while waiting for Streamlit to do their job.

If there is no error then you should see your application live now.

Go and give your first Streamlit (or maybe your first BERT with Streamlit) app a try. You have done great work!

Sharing Our Application

OK, I know you can’t wait to share your application. It’s easy. On your current app page, grab the link in the address bar. That’s the link that you are going to share and show to the world.

Now you have completed all the steps and have your application up and running. What are you waiting for? Go out there and show the world your amazing app. Also, drop the link in the comment section below too 😆.

Go check out my Streamlit application too here. All the code I’m using here is uploaded to my GitHub repository here.

Update 4 Dec 22: My application may or may not be working. I haven’t had time to look into it just yet. The implementation above should still work (maybe with some modification) though.

Recap

In this article, you have learned what Streamlit is, how to build an interactive Streamlit app, and how to deploy it to Streamlit. In the upcoming article, I will discuss how we can integrate Streamlit with another serverless service, Firebase by Google!

See you soon and stay healthy ❤️